When my system was installed, the batteries were Revov’s (an early model, not like the ones advertised now). Then last year I noticed what looked like a drop in battery performance and contacted Revov, with the installer copied in. Long story short: I now have a Freedom Won 10/8.

Revov did various things including taking the battery away for testing. It passed, but read their warranty. They also said the settings were wrong (installer had followed their instructions, and I know better than to fiddle with that stuff). They started saying that the batteries had been charged at over 125A. This was a problem because my inverter can’t charge at that rate. It then emerged that they had swopped the BMS out. In fact over the 3.5 years I had those batteries, there were 3 BMS. At one point they showed me log entries that suggested the battery had been run with incorrect settings, but those log entries predated the installation… so who knows what useful information was being provided by that particular BMS.

Anyway… in all the disputes and toing and froing, the original question went unanswered.

I had mailed Revov two graphs from the SEMS portal, pointed out what looked like a drop in performance, and asked if this was normal. No pressure. No aggressive/sarcastic wording. If they’d said yes, that’s about normal, I’d probably have chalked it up as a lesson learned, but they never got around to answering it.

So… just so I have one less thing to worry about at night, maybe somebody here can voice an opinion.

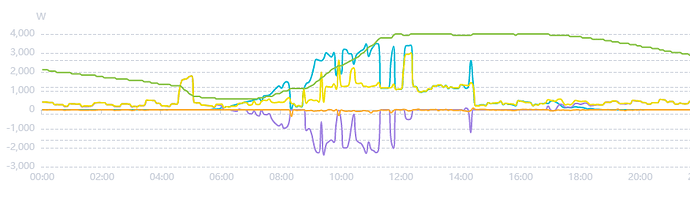

Here’s a graph from May 2020. The batteries had been at my property for about 11 months by then.

Notice the sudden kick up in load (yellow trace) at 4:30. That’s my heat pump. SOC (green trace) at 4:30 is 60%. When the heat pump is done, SOC is 53%, and the drop in SOC is linear. That’s a 7% drop in SOC whilst the heat pump runs.

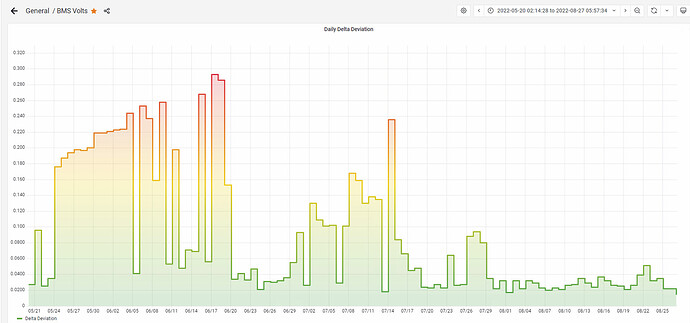

Now look at the second graph.

This is from May 2022. The heat pump now kicks in later because I’m working from home. SOC when the pump starts is 53%. There are no other heavy loads, just the pump and the fridges ticking over (as they do all night). SOC now drops very quickly: after 10 minutes it is 36%, a drop of 17 percentage points already, and the pump is still running (and the system is starting to get some help from PV and grid).
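For anyone who wants the comparison as plain numbers, here is a rough sketch in Python. It only uses the SOC values I read off the two graphs by eye. The function name is made up for illustration, and it assumes the heat pump draws roughly the same power in both years, which I can't prove. It also takes the SOC reported by the BMS at face value.

```python
# SOC readings (percent) read by eye from the two SEMS graphs.
# Assumption: the heat pump load is roughly the same in 2020 and 2022.

def soc_drop(start_pct, end_pct):
    """Return the drop in state of charge, in percentage points."""
    return start_pct - end_pct

drop_2020 = soc_drop(60, 53)  # May 2020: over the whole heat pump run
drop_2022 = soc_drop(53, 36)  # May 2022: first 10 minutes only, pump still running

# The 2022 figure covers only part of the run, so this ratio is a lower bound.
ratio = drop_2022 / drop_2020

print(f"2020: {drop_2020} points over the full heat pump run")
print(f"2022: {drop_2022} points in the first 10 minutes")
print(f"2022 drop is at least {ratio:.1f}x the 2020 drop")
```

Because the 2022 drop is measured before the pump has finished, and PV and grid are already helping by then, the real gap for a full run would presumably be bigger than this ratio suggests.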

So, I know that battery performance drops with time. I remind you of my original question: Is this it? Is this usual degradation?

I didn’t get an answer at the time. I would value opinions now.

PS: Hats off to my installer who couldn’t control Revov, but did interact with them on my behalf, and eventually arranged the deal whereby I ended up with the Freedom Won.